Cybernews: Inside the war on child exploitation

One case early this year involved the St. Rochus daycare center in Kerpen, Germany, which started promoting and encouraging sexual exploration among nursery students. This agenda encompassed several other daycares in Germany.

Even Apple Inc. tried to take up the fight against child sexual abuse material (CSAM) and lost. Back in August 2021, the tech giant announced a controversial software-driven initiative that could have revolutionized CSAM detection and prevention on iPhones by catching the material before it could be uploaded to iCloud.

Comparing image hashes against a database of known CSAM image hashes would have empowered the company to identify the illegal material without actually being able to view a user’s photo library. Positive matches would have been vetted by Apple and then provided to the National Center for Missing and Exploited Children (NCMEC). Apple presented the detection software as having privacy at its core.
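To make the idea concrete, here is a minimal sketch of matching against a set of known hashes. It is an illustration only, not Apple's method: it uses SHA-256 as a stand-in, which matches only byte-identical files, whereas Apple's announced design relied on a perceptual hash and a cryptographic matching protocol. The function names `digest` and `find_matches` and the sample byte strings are hypothetical placeholders.

```python
import hashlib


def digest(image_bytes: bytes) -> str:
    """Return a hex digest for the raw bytes (SHA-256 is a stand-in, an assumption)."""
    return hashlib.sha256(image_bytes).hexdigest()


def find_matches(images: list[bytes], known_hashes: set[str]) -> list[int]:
    """Return the positions of images whose digest appears in the known-hash set.

    Only hashes are compared; the matching step never inspects image content.
    """
    return [i for i, img in enumerate(images) if digest(img) in known_hashes]


# Hypothetical placeholder data standing in for a database of known hashes.
known = {digest(b"flagged-sample")}
uploads = [b"holiday-photo", b"flagged-sample", b"cat-photo"]

# Positions returned here would go to human review before any report is made.
matches = find_matches(uploads, known)
```

The point of the design is that the matcher only ever answers "is this hash in the list?", which is why the approach was pitched as privacy-preserving. The open question, raised later in the article, is who controls the list.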

Big Tech needs to step up

While the tech industry and privacy advocates believed the technology would be too invasive if implemented in the wild, the hesitancy offers little to nothing in the way of a solution, leaving the epidemic to proliferate. That is especially troubling in the wake of the Jeffrey Epstein case, which implicated major global political actors in sex abuse and trafficking.

There were also disruptions among Apple’s child safety executives concerning trust and safety.

Erik Neuenschwander, director of privacy and child safety at Apple, said: “How can users be assured that a tool for one type of surveillance has not been reconfigured to surveil for other content such as political activity or religious persecution?”

It’s also curious to note that we are all still living in a post-9/11 era where privacy has been exchanged for surveillance by governments across the world. Furthermore, the privacy dynamic changed again during the COVID-19 pandemic.

Most Big Tech companies monopolize and monetize user and customer data with virtually no actionable resistance from the public until egregious privacy invasions unfold. For this reason, I find it hard to understand their reluctance to support Apple’s initiative, especially since Apple has a history of opposing governmental encroachments upon its technology.

To put the final nail in the coffin, Meta (formerly Facebook), the social media giant, is currently battling a lawsuit from New Mexico Attorney General Raúl Torrez for apparently creating the perfect environment for a thriving child sexual exploitation marketplace, which its algorithms seemingly do not detect in the slightest.

The lawsuit also states that Meta “proactively served and directed [children] to egregious, sexually explicit images through recommended users and posts – even where the child has expressed no interest in this content.”

In all fairness, during the second quarter of 2023, Meta, which owns Instagram, sent 3.7 million CyberTipline reports to NCMEC for child sexual exploitation. Nevertheless, the question we all should be asking is why predators are so comfortable sharing highly illegal, debased content like this on Meta’s platforms.

It boils down to a lack of consequences, both for the predators and for the companies that refuse to police them. This has everything to do with Meta’s algorithms, where it’s arguably a case of someone being asleep at the wheel.

This has created the perfect environment for predators. Moreover, Meta’s announcement that it would roll out end-to-end encryption for its Messenger platform, made only two days after it was served the lawsuit, strikes a defiant note, despite the epidemic festering on its platforms. Don’t even get me started on the latest trend of using AI image generation to create child sex imagery.

It has, by all definitions, been a war waged on all fronts. Policymakers and legislators have arguably arrived too late. It’s my personal opinion that no organization or government, especially the United States, has the infrastructure to stop the spread of this epidemic unless it approaches the problem with the same determination and resources as the war on terror.

Statistics

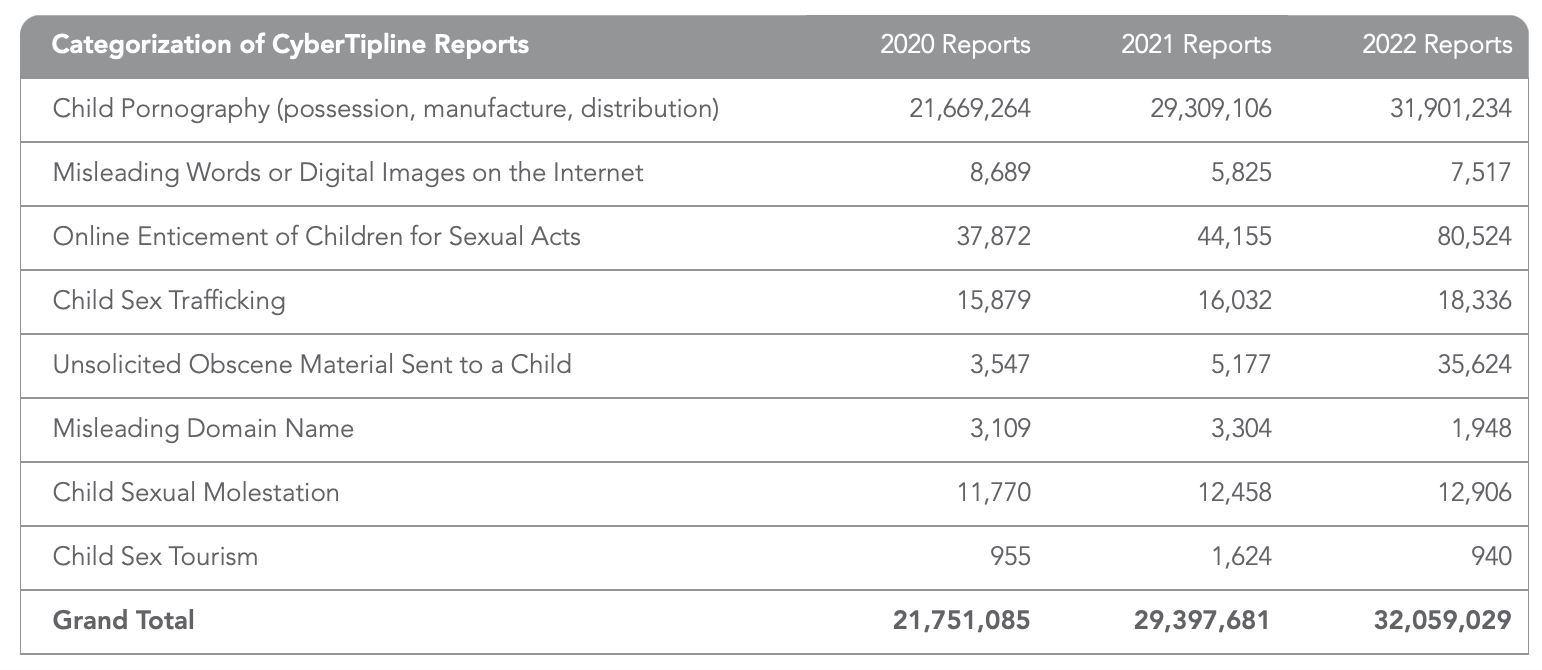

The following statistics are not current, because NCMEC has yet to publish its latest figures. However, NCMEC reported that over 99.5% of reports made to its CyberTipline in 2022 concerned incidents of suspected CSAM.
